Mohammed Shabaj Ahmed

Embedded Software Engineer

Socially Assistive Robot for Habit Formation

2019-08-14

Figure 1: How participants were asked to position the hardware.

This project explores how social robots can support long-term behaviour change in health contexts. Developed during my PhD, it investigates whether daily interactions with a socially assistive robot (SAR) can help users form healthy habits. The full codebase and documentation are available below.

Explore the Full Project

View the complete project on GitHub

Project Overview

This research examines the psychological and behavioural effects of interacting with a SAR over three weeks, and how SARs can encourage positive behaviour change through habit formation in an ecologically valid setting. The project is structured around:

- A daily check-in based on motivational interviewing principles, with dialogue driven by a decision tree.

- A microservice architecture that ensures scalability and modularity.

- A state-driven control system using finite state machines (FSMs) and behaviour trees.

Interaction Design

During the three-week study, participants interacted with the SAR twice daily. These interactions were designed to reinforce the behaviour through repetition and reflection:

- Morning Reminder: The robot provides a brief motivational prompt based on the participant's implementation intention.

- Evening Check-In: A 3–5 minute structured dialogue based on motivational interviewing principles to track progress, address challenges, and encourage reflection. A decision tree controls the conversational flow, adapting questions based on responses.
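The decision-tree dialogue can be pictured with a minimal sketch. The study's actual script and answer-classification method are not described here, so the questions, the keyword-based `classify_yes_no` helper and the names `DialogueNode` and `run_check_in` are all hypothetical; a deployed system would classify speech-recognition output rather than typed strings.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional, Tuple


@dataclass
class DialogueNode:
    """One check-in question; children are keyed by a classified answer label."""
    question: str
    children: Dict[str, "DialogueNode"] = field(default_factory=dict)


def classify_yes_no(answer: str) -> str:
    """Deliberately naive, hypothetical classifier: whole-word keyword match."""
    words = set(answer.lower().replace(",", " ").replace(".", " ").split())
    return "yes" if words & {"yes", "yeah", "yep"} else "no"


def run_check_in(root: DialogueNode, answers: Iterable[str]) -> List[Tuple[str, str]]:
    """Walk the tree, consuming one answer per question, and return the transcript."""
    transcript: List[Tuple[str, str]] = []
    node: Optional[DialogueNode] = root
    answer_iter = iter(answers)
    while node is not None:
        answer = next(answer_iter, "")
        transcript.append((node.question, answer))
        # A node with no child for this answer ends the check-in.
        node = node.children.get(classify_yes_no(answer))
    return transcript


# Hypothetical question wording, not the study's actual script.
CHECK_IN = DialogueNode(
    "Did you complete your planned exercise today?",
    {
        "yes": DialogueNode("Great! How did it feel?"),
        "no": DialogueNode(
            "That's okay. Did something get in the way?",
            {"yes": DialogueNode("What could you try differently tomorrow?")},
        ),
    },
)

for question, answer in run_check_in(CHECK_IN, ["no", "Yes, I was busy", "Go earlier"]):
    print(f"Robot: {question}\nUser:  {answer}")
```

Keeping the tree as data rather than nested `if` statements makes it straightforward to add or reorder questions without touching the traversal code.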

Study Design and Experimental Conditions

This study employed a three-week between-subjects design to explore the role of socially assistive robots (SARs) in habit formation, comparing two conditions: robot-assisted and screen-only. Participants chose an exercise-related behaviour to develop into a habit and performed it in response to a daily cue defined through implementation intentions. Participants were fully informed that the technology aimed to influence their behaviour. The primary variable of interest was the SAR's presence and its influence on habit formation.

In this study, the robot was not there to guide participants through performing the behaviour, nor was it the cue for the behaviour; that cue was defined by each participant's implementation intention. Instead, the robot provided a consistent reminder to perform the behaviour and prompted self-reflection, and its physical embodiment enabled participants to feel that they were conversing with a social agent whose expressivity adjusted to their responses.

Participants were randomly assigned to one of two conditions:

- Robot-assisted Condition: Participants interacted daily with the SAR for reminders and check-ins. The robot provided embodied social interaction through emotional expressivity, conversational engagement, and motivational interviewing techniques.

- Screen-only Condition: Participants interacted daily with an identical dialogue and reminder structure presented through a digital interface without physical embodiment. This condition served as a comparison to isolate the effect of physical embodiment provided by the SAR.

Study Procedure

Figure 2 illustrates the overall study procedure. All participants followed the same initial steps but diverged during the study period into either the robot-assisted or screen-only condition before converging again for the post-study questionnaire and interview.

The study comprised four phases:

- Study qualification: Screening to ensure participants met the study requirements.

- Priming and setup: An introductory session, establishing implementation intentions, and training participants on their assigned interaction method (robot or screen).

- Study period: A 21-day period with minimal external interference beyond necessary communication and system troubleshooting.

- Post-study: Final questionnaires and interviews assessing participants' experience and perceptions, and the effectiveness of the intervention.

Figure 2: Visual representation of the study procedure. Participants completed onboarding and priming sessions before being randomly assigned to either the robot-assisted or screen-only condition for the 21-day study period. The two conditions converged again for the final post-study interview and questionnaires.

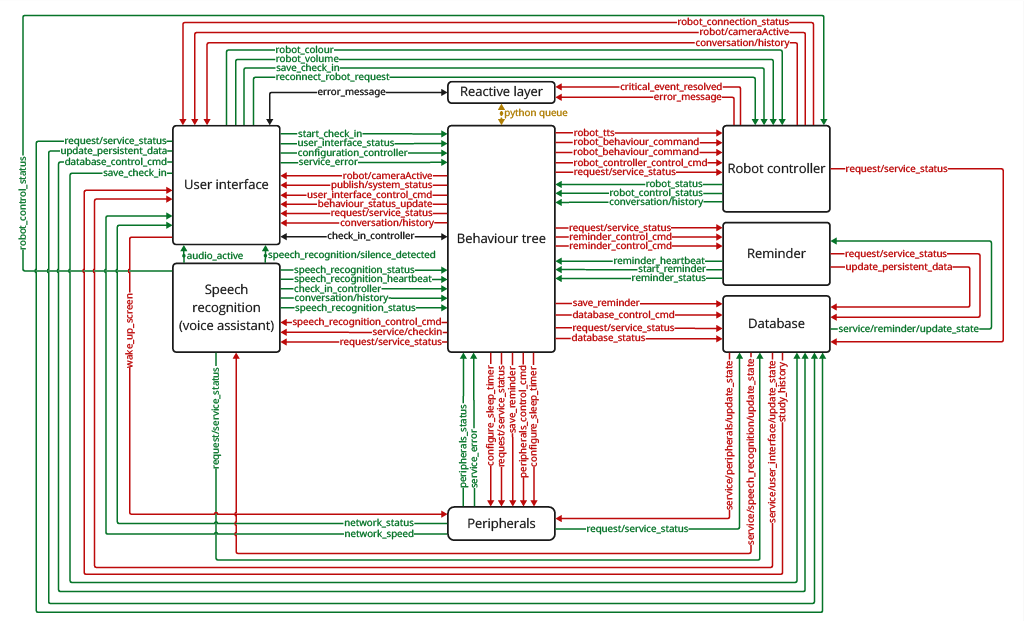

Software Architecture

This project adopts a microservice architecture in which each service is stateless, storing its configuration and runtime state in a centralised database. Inter-service communication uses the Message Queuing Telemetry Transport (MQTT) protocol, a lightweight publish/subscribe protocol in which a broker routes each message to the services subscribed to its topic, supporting event-driven data exchange.
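Because an MQTT broker delivers each published message to every subscription whose topic filter matches, the topic scheme determines which services see which events. The sketch below implements the standard MQTT wildcard rules: `+` matches exactly one topic level, a trailing `#` matches the parent level and any number of child levels, and first-level wildcards do not match topics beginning with `$`. In practice the broker performs this matching; the function only illustrates it, and the topic names are hypothetical rather than taken from Figure 3.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription topic filter.

    Filter validation (e.g. '#' appearing before the last level) is omitted.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    # Wildcards in the first level must not match topics starting with '$'.
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # matches the parent level and everything below it
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical topic names, not the project's actual topic scheme.
print(topic_matches("robot/+/heartbeat", "robot/dialogue/heartbeat"))  # True
print(topic_matches("robot/#", "robot"))                               # True
print(topic_matches("robot/+", "robot/dialogue/heartbeat"))            # False
```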

Figure 3 presents an overview of the system architecture, including all active services and the MQTT topics used for communication. It illustrates how loosely coupled services interact to form a cohesive robot behaviour control system.

Figure 3: Overview of the system architecture showing all stateless services and the MQTT messages exchanged between them. Each service communicates through specific topics to maintain a modular and loosely coupled system.

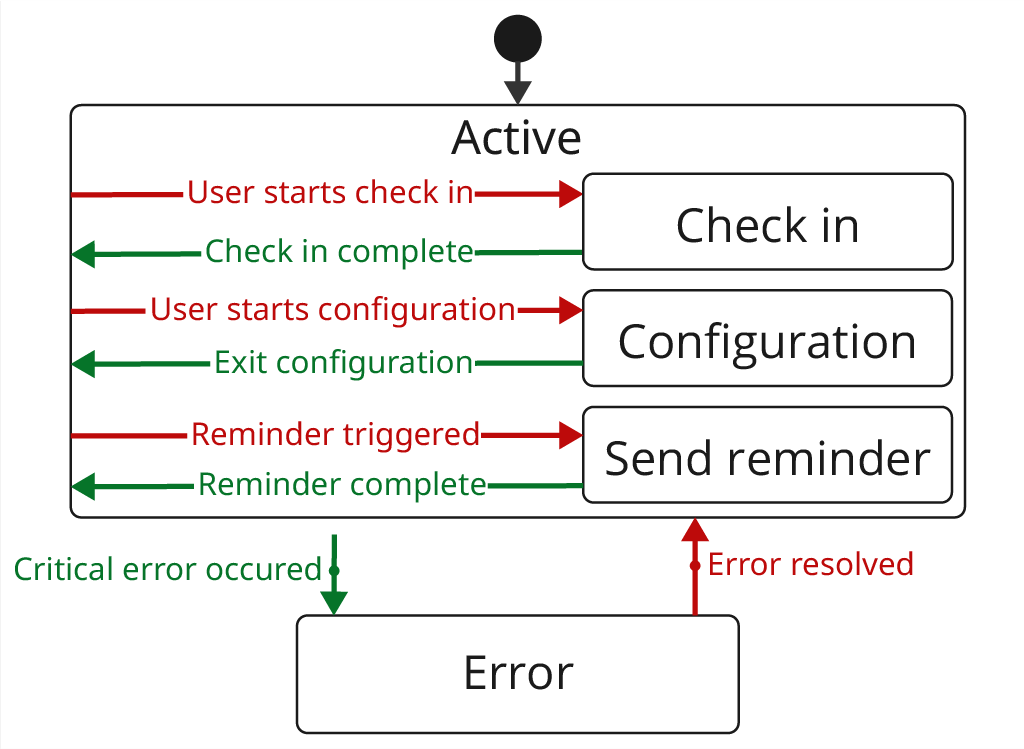

Hybrid State Machine Architecture

An event-driven communication model is employed to ensure the robot responds dynamically to internal and external events. A centralised control system governs the robot's behaviour, implemented as a hybrid state machine that combines reactive and deliberative components:

- Reactive layer: Uses a subsumption architecture to handle critical events (e.g., emergency stops) with priority-based arbitration.

- Deliberative layer: A finite state machine (FSM) controls state activation based on high-level goals, and a behaviour tree structures more complex behaviours while remaining responsive to new events.
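Priority-based arbitration in the reactive layer can be sketched as follows, assuming each reactive behaviour is a predicate over the latest sensor readings. The behaviour names, priorities and sensor flags are hypothetical; the point is that the highest-priority triggered behaviour suppresses every lower one, and a result of `None` leaves control with the deliberative layer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, bool]


@dataclass
class ReactiveBehaviour:
    name: str
    priority: int  # higher value wins arbitration
    triggered: Callable[[Sensors], bool]


def arbitrate(behaviours: List[ReactiveBehaviour], sensors: Sensors) -> Optional[str]:
    """Return the highest-priority triggered behaviour, which subsumes all lower ones.

    None means no reactive behaviour fired, so control stays with the deliberative layer.
    """
    for behaviour in sorted(behaviours, key=lambda b: b.priority, reverse=True):
        if behaviour.triggered(sensors):
            return behaviour.name
    return None


# Hypothetical behaviours and sensor flags, for illustration only.
BEHAVIOURS = [
    ReactiveBehaviour("emergency_stop", 100, lambda s: s.get("stop_pressed", False)),
    ReactiveBehaviour("cliff_avoid", 50, lambda s: s.get("cliff_detected", False)),
    ReactiveBehaviour("low_battery", 10, lambda s: s.get("battery_low", False)),
]

print(arbitrate(BEHAVIOURS, {"cliff_detected": True, "battery_low": True}))  # cliff_avoid
```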

This event-based architecture provides a clear separation between reactive and deliberative actions while maintaining near real-time responsiveness. Figure 4 illustrates the FSM used in the deliberative layer, showing its two high-level states, active and error, and the sub-states that can only be entered from the active state.

Figure 4: Hierarchy of states within the finite state machine (FSM). The system transitions between two high-level states: active and error. From the active state, it can enter sub-states such as interacting, configuring, and reminding.
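The state hierarchy in Figure 4, together with the cleanup and setup procedures the project runs on each state transition, can be modelled with a small hierarchical state machine. This is a sketch, not the project's implementation: the `PARENT` table encodes the hierarchy from the figure, and the string log entries stand in for whatever cleanup and setup hooks the real system calls.

```python
from typing import Dict, List, Optional

# Parent of each state (None for top-level states), following Figure 4.
PARENT: Dict[str, Optional[str]] = {
    "active": None,
    "error": None,
    "interacting": "active",
    "configuring": "active",
    "reminding": "active",
}


def ancestry(state: str) -> List[str]:
    """Root-first path to a state, e.g. ['active', 'reminding']."""
    path: List[str] = []
    current: Optional[str] = state
    while current is not None:
        path.append(current)
        current = PARENT[current]
    return path[::-1]


class HierarchicalFSM:
    def __init__(self) -> None:
        self.state = "active"
        self.log: List[str] = []  # stands in for real cleanup/setup hooks

    def transition(self, target: str) -> bool:
        if target not in PARENT:
            raise ValueError(f"unknown state: {target}")
        old_path, new_path = ancestry(self.state), ancestry(target)
        parent = PARENT[target]
        # Sub-states can only be entered while their parent (e.g. 'active') is active.
        if parent is not None and parent not in old_path:
            return False
        shared = 0
        while (shared < min(len(old_path), len(new_path))
               and old_path[shared] == new_path[shared]):
            shared += 1
        for state in reversed(old_path[shared:]):  # innermost state cleaned up first
            self.log.append(f"cleanup:{state}")
        for state in new_path[shared:]:            # outermost state set up first
            self.log.append(f"setup:{state}")
        self.state = target
        return True


fsm = HierarchicalFSM()
fsm.transition("reminding")
fsm.transition("error")
print(fsm.log)  # ['setup:reminding', 'cleanup:reminding', 'cleanup:active', 'setup:error']
print(fsm.transition("interacting"))  # False: sub-states need 'active'
```

Leaving a sub-state for `error` cleans up both the sub-state and its `active` parent, which is what keeps transitions predictable.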

Advantages of This Approach

- Centralised Decision Making: The state machines act as a central point for decision-making, so the robot's current state is always considered before any action is taken, which prevents conflicting actions.

- Consistency in Behaviour: Interactions are predictable and logical. Every state transition runs a cleanup procedure for the state being exited and a setup procedure for the state being entered, so transitions are handled safely.

- Statelessness: All service states and configurations are stored in a centralised SQL database, improving fault tolerance.

- Scalability: New states and complex behaviours are easier to add and manage.

Stateless Services

All services are stateless; their state is stored in the centralised database. This ensures:

- Resilience: Services can recover from failures without losing critical state.

- Consistency: The robot can resume interactions without losing context.

- Modularity: Independent services can be maintained and updated efficiently.
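The stateless pattern amounts to "load, update, persist" inside every handler. The sketch below assumes SQLite via Python's built-in `sqlite3` module as a stand-in for the project's centralised SQL database, with a hypothetical `service_state` table that stores each service's state as JSON; the service name `checkin_service` is also illustrative.

```python
import copy
import json
import sqlite3
from typing import Any, Dict


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS service_state ("
        "service TEXT PRIMARY KEY, state TEXT NOT NULL)"
    )
    conn.commit()


def load_state(conn: sqlite3.Connection, service: str, default: Dict[str, Any]) -> Dict[str, Any]:
    row = conn.execute(
        "SELECT state FROM service_state WHERE service = ?", (service,)
    ).fetchone()
    return json.loads(row[0]) if row else copy.deepcopy(default)


def save_state(conn: sqlite3.Connection, service: str, state: Dict[str, Any]) -> None:
    conn.execute(
        "INSERT OR REPLACE INTO service_state (service, state) VALUES (?, ?)",
        (service, json.dumps(state)),
    )
    conn.commit()


def on_check_in_completed(conn: sqlite3.Connection, study_day: int) -> Dict[str, Any]:
    """Handler that keeps nothing in memory between calls: load, update, persist."""
    state = load_state(conn, "checkin_service", {"completed_days": []})
    if study_day not in state["completed_days"]:
        state["completed_days"].append(study_day)
    save_state(conn, "checkin_service", state)
    return state


conn = sqlite3.connect(":memory:")  # a file path would survive a real restart
init_db(conn)
on_check_in_completed(conn, 1)
on_check_in_completed(conn, 2)
print(load_state(conn, "checkin_service", {}))  # {'completed_days': [1, 2]}
```

Because the handler reads its state from the database on every call, a crashed and restarted service picks up exactly where it left off.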

Communication

MQTT facilitates service-to-service communication. The messaging patterns used in this project were:

- Request-Response: The sender waits for a response before proceeding, e.g., service commands.

- Fire and Forget: The sender publishes a message without waiting for an acknowledgement, e.g., service heartbeats.
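The two messaging patterns can be illustrated with an in-memory stand-in for the broker. MQTT 5 defines Response Topic and Correlation Data properties for request-response; this sketch instead carries equivalent hypothetical `response_topic` and `correlation_id` fields in the payload. `ToyBroker` delivers messages synchronously, so the reply arrives before `publish` returns; with a real MQTT client, replies arrive on a network thread and the requester would wait with a timeout.

```python
import uuid
from collections import defaultdict
from typing import Callable, Dict, List, Optional

Handler = Callable[[dict], None]


class ToyBroker:
    """In-memory stand-in for an MQTT broker: exact topic match, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def unsubscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].remove(handler)

    def publish(self, topic: str, payload: dict) -> None:
        for handler in list(self._subscribers[topic]):
            handler(payload)


def fire_and_forget(broker: ToyBroker, topic: str, payload: dict) -> None:
    broker.publish(topic, payload)  # no acknowledgement is expected


def request(broker: ToyBroker, topic: str, payload: dict) -> Optional[dict]:
    """Publish a request carrying a correlation ID and a private response topic."""
    correlation_id = str(uuid.uuid4())
    response_topic = f"{topic}/response/{correlation_id}"
    replies: List[dict] = []

    def on_reply(message: dict) -> None:
        if message.get("correlation_id") == correlation_id:
            replies.append(message)

    broker.subscribe(response_topic, on_reply)
    try:
        broker.publish(topic, {**payload, "correlation_id": correlation_id,
                               "response_topic": response_topic})
    finally:
        broker.unsubscribe(response_topic, on_reply)
    return replies[0] if replies else None


# Hypothetical responder and topics.
broker = ToyBroker()
broker.subscribe("robot/volume/set", lambda msg: broker.publish(
    msg["response_topic"], {"correlation_id": msg["correlation_id"], "ok": True}))
print(request(broker, "robot/volume/set", {"level": 3}))
fire_and_forget(broker, "service/dialogue/heartbeat", {"alive": True})
```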

Communication Flow

Within individual services, a custom event dispatcher decouples the business logic from the communication scripts. The dispatcher maintains a dictionary that maps unique event_name identifiers to handler functions. Each event and its payload are dispatched synchronously to the registered handler, so messages are handled predictably in first-in, first-out (FIFO) order. Because the core business logic is separated from the messaging layer, the underlying message broker can be changed with minimal refactoring.
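A dispatcher of this kind fits in a few lines. The class name, method names and the `set_volume` event are hypothetical; only the design (a dictionary from `event_name` to handler, with synchronous dispatch) comes from the description.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Any], Any]


class EventDispatcher:
    """Maps event names to handler functions and calls them synchronously."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, event_name: str, handler: Handler) -> None:
        if event_name in self._handlers:
            raise ValueError(f"handler already registered for {event_name!r}")
        self._handlers[event_name] = handler

    def dispatch(self, event_name: str, payload: Any = None) -> Any:
        handler = self._handlers.get(event_name)
        if handler is None:
            raise KeyError(f"no handler registered for {event_name!r}")
        # Synchronous call: the next event is not processed until this one returns.
        return handler(payload)


# Business logic registers handlers without knowing about MQTT.
dispatcher = EventDispatcher()
volume_levels = []
dispatcher.register("set_volume", lambda payload: volume_levels.append(payload["level"]))

# The messaging layer (e.g. an MQTT message callback) would translate incoming
# messages into dispatch calls; here two messages are simulated in arrival order.
for event_name, payload in [("set_volume", {"level": 2}), ("set_volume", {"level": 4})]:
    dispatcher.dispatch(event_name, payload)
print(volume_levels)  # [2, 4]
```

Swapping MQTT for another transport would only change the code that calls `dispatch`, not the registered handlers.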

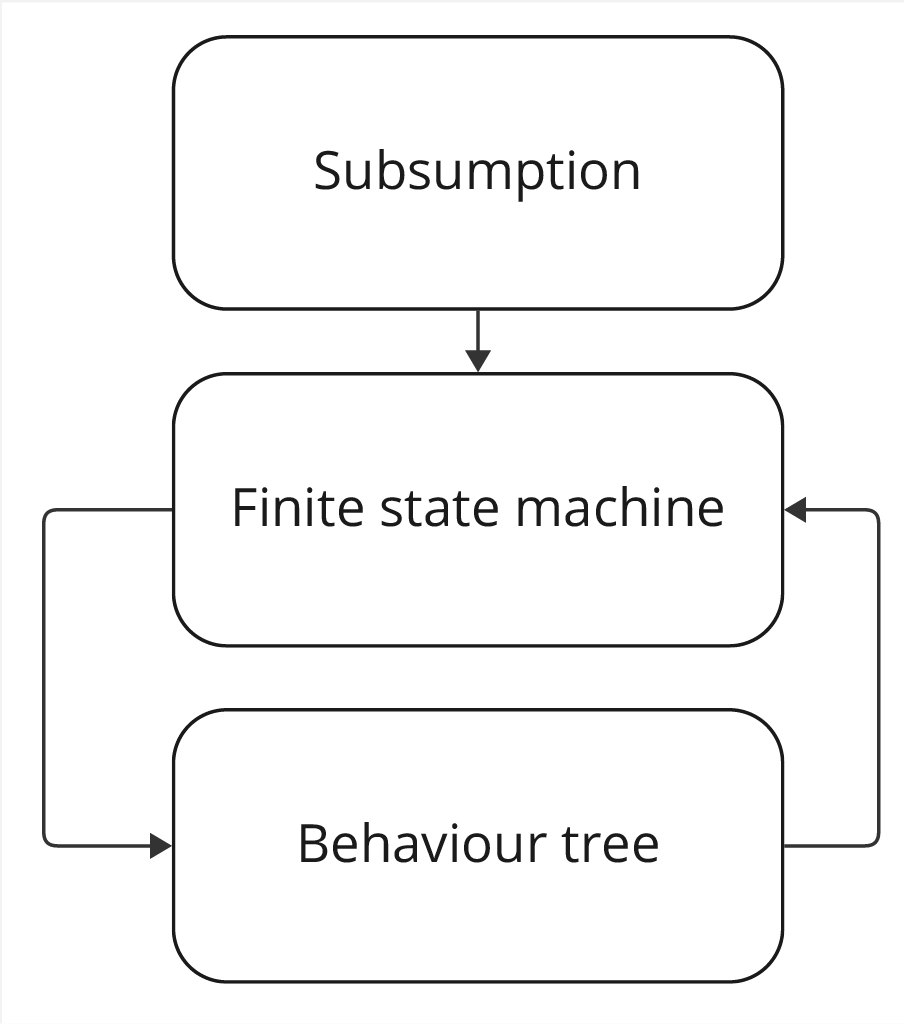

Additionally, Python event queues were used to communicate between the state machines, primarily to request state transitions. The communication flow is as follows:

- The FSM subscribes to two event queues: one from the subsumption state machine (SS), which publishes priority-based reactive commands, and one from the behaviour tree (BT), which issues user-driven or goal-oriented events.

- The behaviour tree (BT) subscribes to a single queue from the FSM, receiving instructions that guide the execution of structured behaviours.

- The FSM listens for incoming events and transition requests from both the SS and BT, processes them in priority order, and issues state transition commands to the BT.

An illustration of this communication hierarchy and flow between the state machine layers is depicted in Figure 5.

Figure 5: Illustration of the communication flow between the subsumption state machine (SS), finite state machine (FSM), and behaviour tree (BT). The FSM subscribes to queues from both SS and BT and manages state transitions accordingly. The BT subscribes only to the FSM to receive high-level behaviour commands.
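The queue-based flow between the layers can be sketched with Python's thread-safe `queue.Queue`, assuming transition requests are plain state names. Reactive requests from the SS are always drained before BT requests; the class name and the guard that ignores requests while in `error` (other than a reset to `active`) are hypothetical, added for illustration.

```python
import queue
from typing import Optional


class DeliberativeFSM:
    """Consumes transition requests from the SS and BT queues, SS first."""

    def __init__(self, ss_requests: queue.Queue, bt_requests: queue.Queue,
                 bt_commands: queue.Queue) -> None:
        self.ss_requests = ss_requests  # reactive, priority-based (subsumption)
        self.bt_requests = bt_requests  # user-driven or goal-oriented (behaviour tree)
        self.bt_commands = bt_commands  # the only queue the BT subscribes to
        self.state = "active"

    def _next_request(self) -> Optional[str]:
        # Always drain reactive requests before looking at BT requests.
        for source in (self.ss_requests, self.bt_requests):
            try:
                return source.get_nowait()
            except queue.Empty:
                continue
        return None

    def step(self) -> bool:
        """Handle one request; return False when both queues are empty."""
        request = self._next_request()
        if request is None:
            return False
        # Hypothetical guard: while in 'error', only a reset to 'active' is accepted.
        if self.state == "error" and request != "active":
            return True
        self.state = request
        self.bt_commands.put({"command": "enter_state", "state": request})
        return True


ss_q, bt_q, cmd_q = queue.Queue(), queue.Queue(), queue.Queue()
fsm = DeliberativeFSM(ss_q, bt_q, cmd_q)
bt_q.put("interacting")  # queued first by the BT...
ss_q.put("error")        # ...but the reactive request wins
while fsm.step():
    pass
print(fsm.state)  # error
```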

User Interface

A screen-based user interface was included to increase transparency around the data being collected (DR9) and to provide participants with an additional modality for interacting with the robot beyond voice dialogue. The user interface was implemented as a web app using Flask and consisted of four main pages: Home, Check-In, History, and Settings. Each page was designed to support a specific function, such as initiating interactions, tracking progress, or adjusting settings. The interface also provided real-time feedback on the robot's current state and gave participants insight into the data being processed.

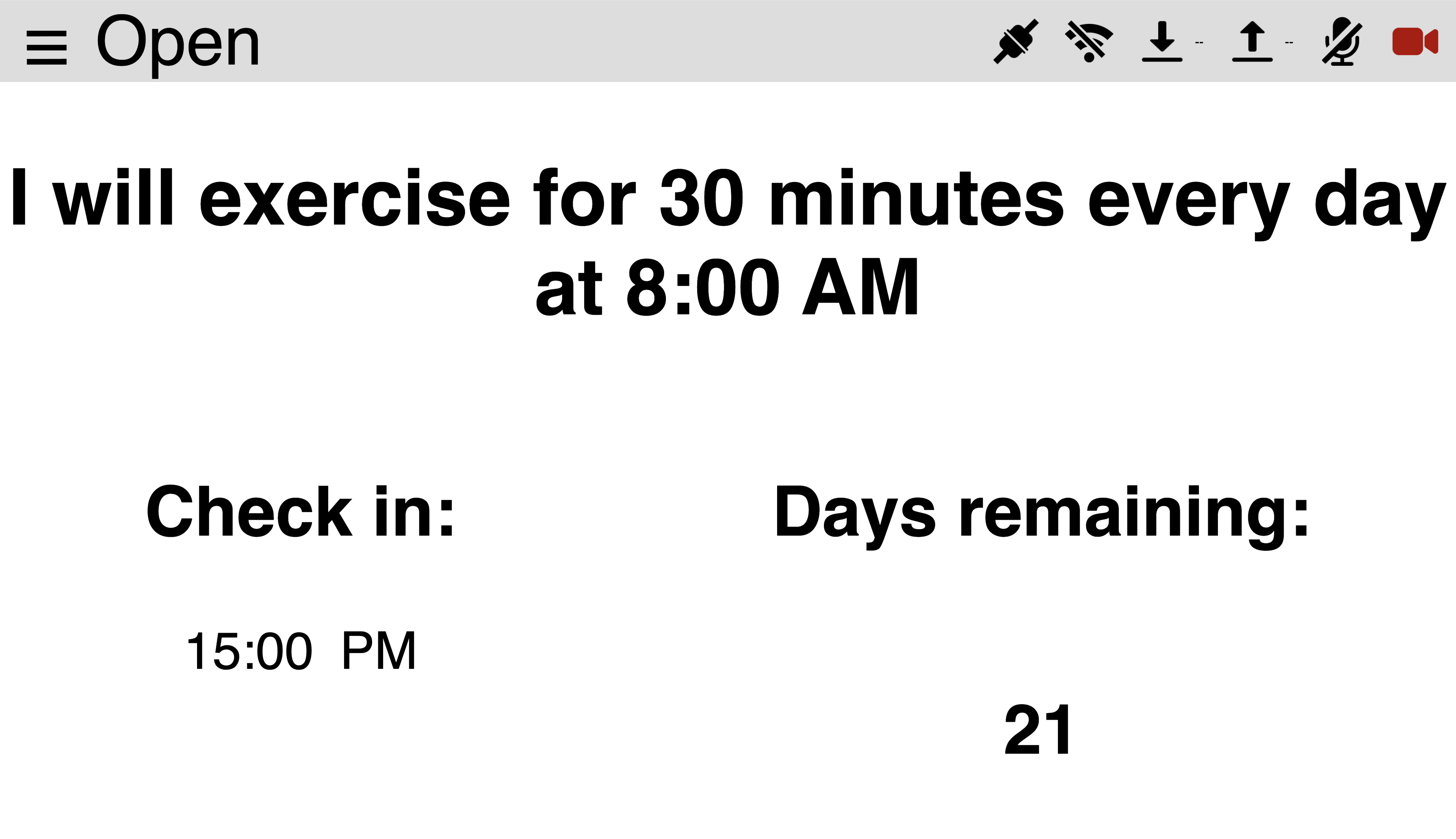

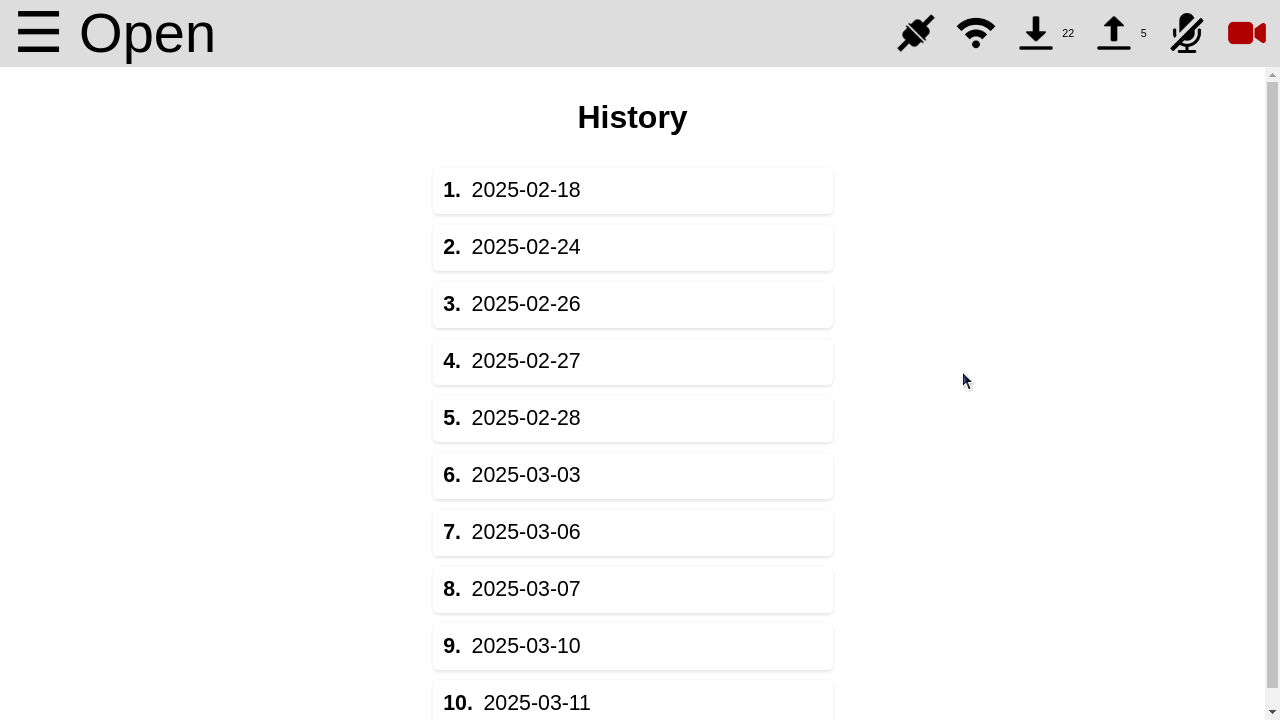

Home

The home screen displayed the participant's implementation intention, along with the time of the next scheduled reminder and the number of days remaining in the study (Figure 6.A). To access other screens, participants could click the “Open” button in the top-left corner, which revealed a navigation menu (Figure 6.B).

Figure 6.A: Home Screen. Figure 6.B: Menu Screen.

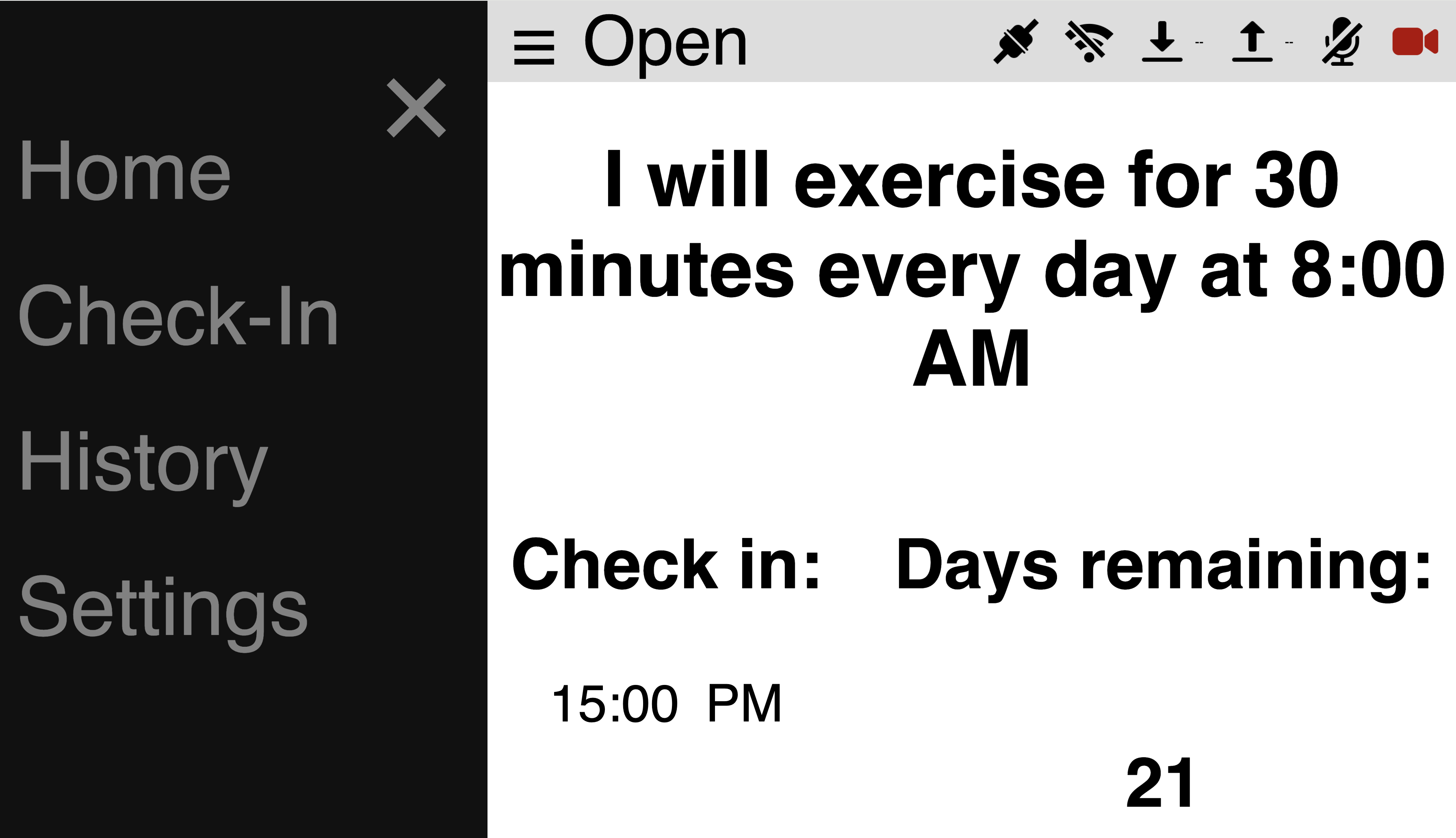
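The two values shown on the home screen reduce to simple date arithmetic. The sketch assumes naive local times, a fixed daily reminder time, and that "days remaining" counts the current day; the function names are hypothetical.

```python
from datetime import date, datetime, time, timedelta

STUDY_LENGTH_DAYS = 21


def next_reminder(now: datetime, reminder_time: time) -> datetime:
    """Next occurrence of the daily reminder strictly after `now` (naive local time)."""
    candidate = datetime.combine(now.date(), reminder_time)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


def days_remaining(today: date, start: date, length_days: int = STUDY_LENGTH_DAYS) -> int:
    """Days left in the study, counting today; 0 once the study has ended."""
    first_day_after = start + timedelta(days=length_days)
    return max(0, (first_day_after - today).days)


now = datetime(2024, 3, 1, 9, 30)
print(next_reminder(now, time(8, 0)))                # 2024-03-02 08:00:00
print(days_remaining(now.date(), date(2024, 3, 1)))  # 21
```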

Check-In

To start a check-in, participants first navigated to the check-in page, where a green “Start Check-In” button initiated the dialogue (Figure 7.A). The check-in interface resembled a messaging application, displaying the robot's questions and the participant's previous responses in a scrollable, chat-like format (Figure 7.B). This design helped participants stay oriented during the conversation and review what had already been discussed. Upon completion, participants could choose to save or redo their responses; if no action was taken within 20 seconds, the check-in was saved automatically.

Figure 7.A: Check-in screen. Figure 7.B: Conversation history.

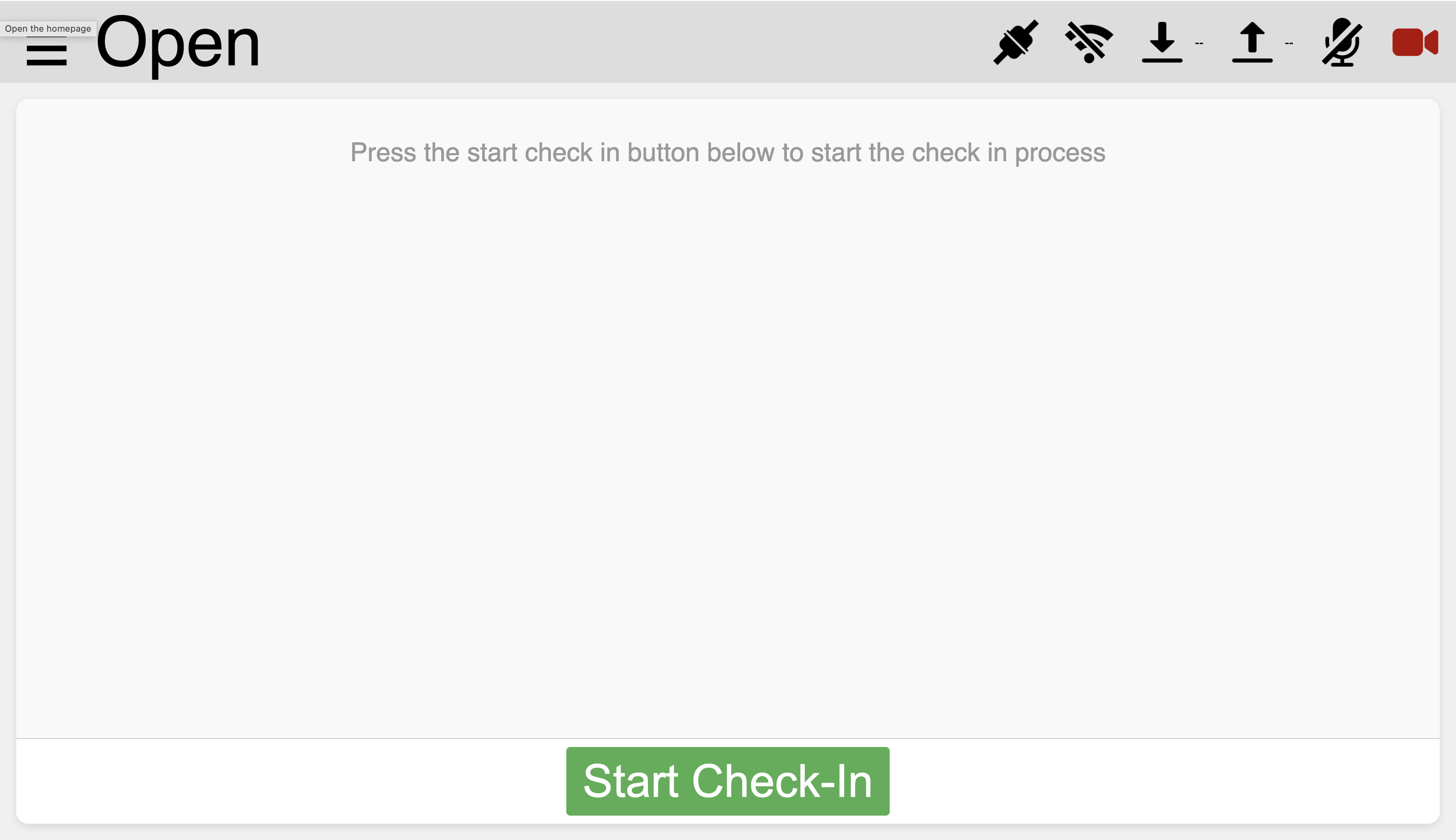
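The 20-second auto-save can be sketched with `threading.Timer`, assuming the participant's save or redo choice arrives on a different thread from the timer. The lock ensures that whichever decision comes first wins; the class and method names are hypothetical, and the example shortens the timeout so it runs quickly.

```python
import threading
from typing import Callable, List

AUTO_SAVE_SECONDS = 20.0  # timeout stated in the text


class CheckInReview:
    """End-of-check-in review: the participant saves or redoes, or a timer saves."""

    def __init__(self, responses: List[str], save: Callable[[List[str]], None],
                 timeout_s: float = AUTO_SAVE_SECONDS) -> None:
        self.responses = responses
        self._save = save
        self._lock = threading.Lock()
        self._decided = False
        self.timer = threading.Timer(timeout_s, self._decide, args=("save",))
        self.timer.daemon = True

    def start(self) -> None:
        self.timer.start()

    def _decide(self, action: str) -> bool:
        # Only the first decision (participant or timer) takes effect.
        with self._lock:
            if self._decided:
                return False
            self._decided = True
        self.timer.cancel()
        if action == "save":
            self._save(self.responses)
        return True

    def save(self) -> bool:
        return self._decide("save")

    def redo(self) -> bool:
        return self._decide("redo")


saved = []
review = CheckInReview(["Yes", "It felt good"], saved.append, timeout_s=0.1)
review.start()
review.timer.join()  # wait for the shortened timeout to fire
print(saved)  # [['Yes', 'It felt good']]
```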

History

The history page allowed participants to view their check-in activity over time. It displayed the days on which the participant had completed a check-in, providing an overview of their adherence to the intervention and the data collected (Figure 8).

Figure 8: History screen.

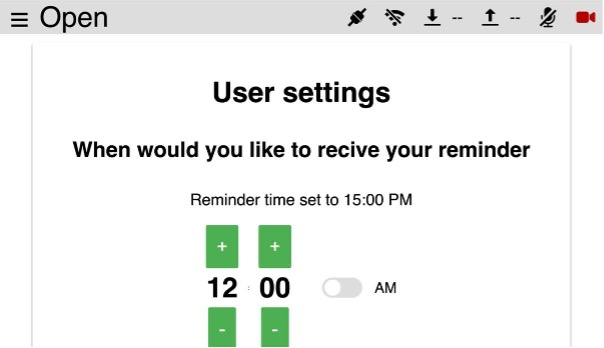
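A history view like this can be derived from the set of days with a completed check-in. This is a minimal sketch with hypothetical function names; the adherence ratio (completed days over elapsed study days) is an illustrative metric, not necessarily one the project reported.

```python
from datetime import date, timedelta
from typing import List, Set, Tuple


def check_in_history(start: date, today: date, completed: Set[date]) -> List[Tuple[date, bool]]:
    """One (day, completed?) entry per study day from `start` up to `today` inclusive."""
    n_days = max(0, (today - start).days + 1)
    days = [start + timedelta(days=i) for i in range(n_days)]
    return [(day, day in completed) for day in days]


def adherence(history: List[Tuple[date, bool]]) -> float:
    """Fraction of elapsed study days with a completed check-in."""
    if not history:
        return 0.0
    return sum(done for _, done in history) / len(history)


history = check_in_history(date(2024, 3, 1), date(2024, 3, 4),
                           {date(2024, 3, 1), date(2024, 3, 3)})
print(adherence(history))  # 0.5
```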

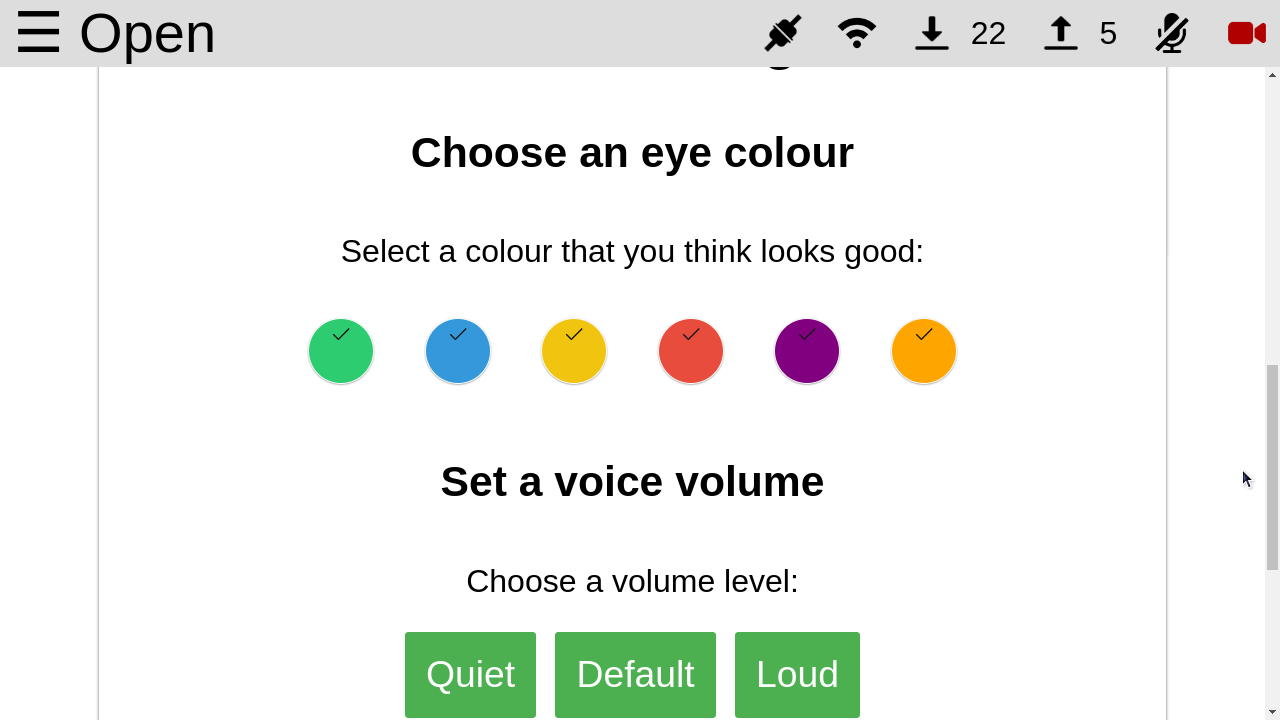

Settings

The settings page provided controls for modifying aspects of the study configuration. These included options for adjusting the reminder time (Figure 9.A), as well as the robot's eye colour, the robot's volume, and the touchscreen brightness (Figure 9.B).

Figure 9.A: Settings screen - reminder. Figure 9.B: Settings screen - robot customisation.

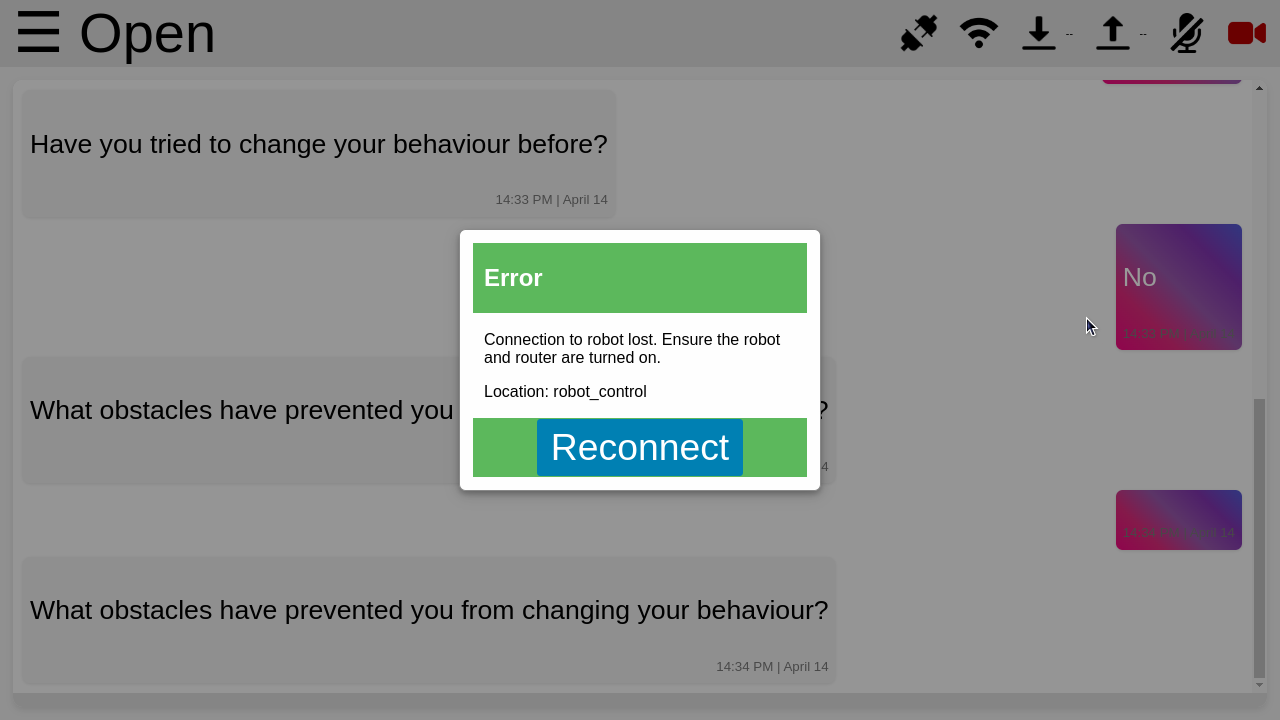
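Settings submitted from the touchscreen are worth validating before they reach the robot. The sketch below assumes form values arrive as strings, eye colour is a `#RRGGBB` hex string, and volume and brightness use a 0 to 100 scale; these representations, ranges and the `Settings` class are hypothetical and do not reflect the Vector or display APIs.

```python
import re
from dataclasses import dataclass
from datetime import time
from typing import Mapping

HEX_COLOUR = re.compile(r"#[0-9A-Fa-f]{6}")


@dataclass(frozen=True)
class Settings:
    reminder_time: time
    eye_colour: str  # "#RRGGBB" (hypothetical representation)
    volume: int      # hypothetical 0-100 scale
    brightness: int  # hypothetical 0-100 scale

    def __post_init__(self) -> None:
        if not HEX_COLOUR.fullmatch(self.eye_colour):
            raise ValueError(f"eye_colour must look like #RRGGBB, got {self.eye_colour!r}")
        for name in ("volume", "brightness"):
            value = getattr(self, name)
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be between 0 and 100, got {value}")

    @classmethod
    def from_form(cls, form: Mapping[str, str]) -> "Settings":
        """Build validated settings from submitted form strings."""
        return cls(
            reminder_time=time.fromisoformat(form["reminder_time"]),  # e.g. "07:30"
            eye_colour=form["eye_colour"],
            volume=int(form["volume"]),
            brightness=int(form["brightness"]),
        )


settings = Settings.from_form(
    {"reminder_time": "07:30", "eye_colour": "#00FF88", "volume": "60", "brightness": "80"}
)
print(settings.reminder_time)  # 07:30:00
```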

Error Handling

Given the long-term nature of the study, robust error management was essential. In the event of a fatal error, an error screen was displayed to inform the participant of the issue. This screen included a description of the error to support debugging and maintain system transparency (Figure 10).

Figure 10: Connection error message.
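One way to detect the failures that lead to an error screen is to watch the fire-and-forget heartbeats that services publish. The sketch assumes timestamps in seconds (for example from `time.monotonic()`) and a fixed timeout; the class, the service names and the message wording are hypothetical.

```python
from typing import Dict, Iterable, List


class HeartbeatMonitor:
    """Flags services whose heartbeats have stopped arriving."""

    def __init__(self, services: Iterable[str], timeout_s: float, start: float) -> None:
        self.timeout_s = timeout_s
        # Treat start-up as a heartbeat so services get one timeout to come online.
        self._last_seen: Dict[str, float] = {name: start for name in services}

    def record(self, service: str, timestamp: float) -> None:
        self._last_seen[service] = timestamp

    def stale_services(self, now: float) -> List[str]:
        return sorted(name for name, seen in self._last_seen.items()
                      if now - seen > self.timeout_s)


def error_message(stale: List[str]) -> str:
    """Text for an error screen; hypothetical wording."""
    return "Connection error: no response from " + ", ".join(stale)


monitor = HeartbeatMonitor(["dialogue", "scheduler"], timeout_s=10.0, start=0.0)
monitor.record("dialogue", 8.0)
stale = monitor.stale_services(now=12.0)
if stale:
    print(error_message(stale))  # Connection error: no response from scheduler
```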

User Requirements

- Adaptive coaching strategy: Dynamic coaching based on ongoing analysis of feedback.

- Professional boundaries: Avoid psychological counselling; focus on behaviour change.

- Provide reminders and cues: Timely auditory/visual prompts for key tasks.

- Track progress and provide feedback: Monitor goal adherence and progress.

- Motivate and encourage: Adaptive motivational messaging.

- Make the process fun and engaging: Use gamification to increase adherence.

- Availability and accessibility: Proactive, engaging, and widely accessible robot.

- Support for autonomy and personal growth: Allow user-driven goal-setting and planning.

- Ethical data handling: Secure, lawful storage and handling of personal data.

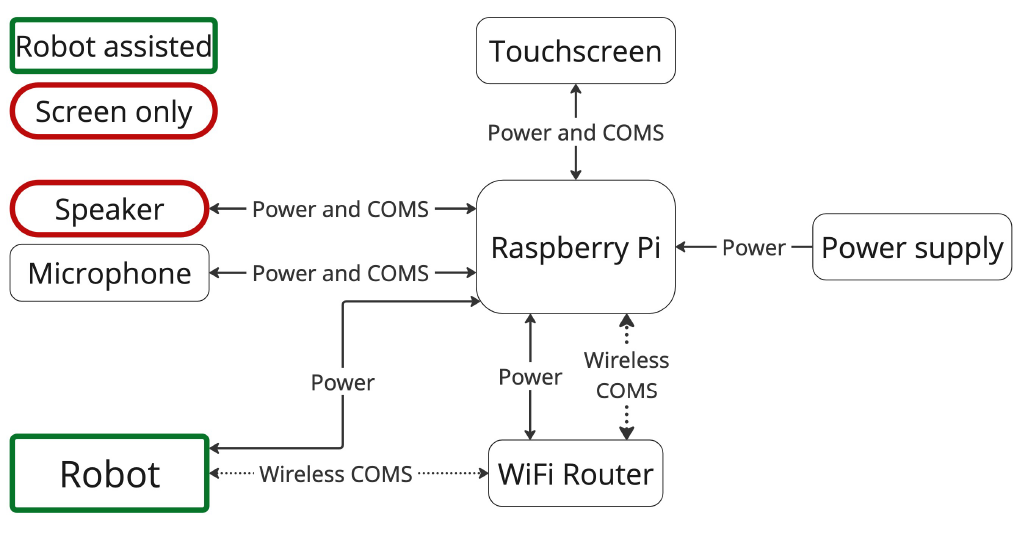

Hardware Selection

To support autonomous, real-world deployment in participants' homes, the system was built using off-the-shelf components for reliable performance and consistent operation over a 21-day study period. The setup consisted of six main components: a Vector 2.0 robot, a Raspberry Pi 5, a portable 4G Wi-Fi router, an external USB microphone, an external USB speaker, and a 5-inch touchscreen LCD. Figure 11 illustrates how the components were integrated, and Figure 1 shows how participants were asked to position the hardware.

Figure 11: Hardware setup used for in-home deployment of the SAR system. The Raspberry Pi 5 served as the central controller, connected to a touchscreen (via the DSI connector), an external USB microphone, an external USB speaker, and a portable 4G Wi-Fi router, which established a local network for communicating with the Vector robot. All peripherals were powered via the Raspberry Pi's USB ports, and the system operated from a single USB-C 5A power supply.

Conclusion

This project explored how a socially assistive robot (SAR) could support healthy habit formation through daily interactions in a real-world, in-home setting. Over a three-week period, participants engaged with either a robot-assisted or screen-only system designed to promote physical activity via structured reminders and self-reflective check-ins.

The findings revealed that embodiment matters: participants in the robot-assisted condition reported stronger emotional engagement, greater perceived companionship, and a heightened sense of accountability. These relational effects translated into improved motivation and enjoyment compared with the screen-only group. However, both systems helped users reflect on their behaviours, highlighting the potential of structured, conversational coaching even without embodiment.

At the same time, the study identified critical limitations. Technical issues like voice recognition errors and system instability negatively impacted user trust and long-term engagement. Participants also expressed a desire for more adaptive, personalised dialogue and greater flexibility in how reminders were delivered.

In response, this project contributed three new design requirements to guide future development:

- Conversational memory to support more adaptive, human-like interactions.

- Context-aware reminder systems that can operate across platforms.

- Robust error recovery mechanisms to maintain user trust during long-term use.

Overall, this research demonstrates the promise of SARs in promoting healthy behaviour change, but it also underscores the need for reliable systems and thoughtful interaction design. By combining embodiment with adaptive, user-centred features, future socially assistive robots can become powerful allies in supporting long-term health and well-being.
